MEASUREMENTS AND ERRORS

MEASUREMENTS

There are several requirements that must be met if a measurement is to be useful in a scientific experiment:

The Number of Determinations

It is a fundamental law of laboratory work that a single measurement is of little value because it is liable not only to gross mistakes but also to smaller random errors. Accordingly, it is customary to repeat all measurements as many times as possible. The laws of statistics lead to the conclusion that the value having the highest probability of being correct is the arithmetic mean or average, obtained by dividing the sum of the individual readings by the total number of observations. Because of time limitations, we often suggest you make only a minimal number of repeated measurements, but remember that this reduces the reliability and respectability of your results.
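As a minimal sketch of the averaging described above, the following Python snippet computes the arithmetic mean of a set of repeated readings. The readings are hypothetical values chosen only for illustration; they are not taken from any experiment in this manual.

```python
from statistics import mean

# Hypothetical repeated readings of one length, in cm (illustrative values only).
readings_cm = [2.81, 2.79, 2.80, 2.82, 2.78]

# Arithmetic mean: the sum of the readings divided by the number of observations.
best_value_cm = sum(readings_cm) / len(readings_cm)

# The standard library's mean() gives the same result.
assert abs(best_value_cm - mean(readings_cm)) < 1e-12

print(f"{best_value_cm:.2f} cm")
```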

Zero Reading

Every measurement is really a difference between two readings, although for convenience, most instruments are calibrated so that one of these readings will be zero. In many instruments, this zero is not exact for all time but may shift slightly due to wear or usage. Thus it is essential that the zero be checked before every measurement where it is one of the two readings. In some cases the zero can be reset manually, while in others it is necessary to record the exact zero reading and correct all subsequent readings accordingly.

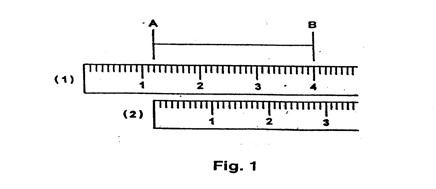

For example, when measuring the length AB in Fig. 1, a ruler could be placed as in position (1), with 1.2 cm at A; then length AB = (4.0 - 1.2) cm = 2.8 cm. The more usual ruler position (2) allows the length AB to be read as 2.8 cm directly, but remember that this is still the difference between two readings: 2.8 cm and 0.0 cm.
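The same arithmetic can be sketched in Python. The function name `length_from_readings` is a hypothetical helper introduced here for illustration; the numbers are those of the ruler example.

```python
def length_from_readings(end_reading_cm, zero_reading_cm=0.0):
    """Return a length as the difference between two scale readings."""
    return end_reading_cm - zero_reading_cm

# Ruler position (1): the 1.2 cm mark sits at A and the 4.0 cm mark at B.
ab_position_1 = length_from_readings(4.0, 1.2)

# Ruler position (2): the zero mark sits at A, so the second reading is 0.0 cm.
ab_position_2 = length_from_readings(2.8, 0.0)

print(f"{ab_position_1:.1f} cm, {ab_position_2:.1f} cm")
```

If the zero of an instrument has shifted, the recorded zero reading is passed as `zero_reading_cm`, and every later reading is corrected in the same way.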

Accuracy

Quantitative work requires that each measurement be made as accurately as possible. The main units of a scale are usually subdivided, and the eye can subdivide a distance as small as 1 mm into five parts reasonably accurately. Thus, if a linear scale is divided into millimeters, e.g. on a high quality ruler, a reading could be expressed to 0.2 of a millimeter; e.g. 4.6 mm, 27.42 cm, where 3/5 and 1/5 of a mm are estimated by eye. In cases where the reading falls exactly on a scale division, the estimated figure would be 0; e.g. 48.50 cm, indicating that you know the reading more accurately than 48.5 cm. But it would not be possible to take a reading with greater accuracy than 0.2 mm with this equipment. If the scale is not finely engraved, as with the lab meter sticks for example, it could probably only be read to 0.5 mm.

The accuracy desired from a measurement dictates the choice of instrument. For example, a distance of 4 m should not be measured by a car's odometer, nor a distance of 2 km with a micrometer. The student learns to decide which instrument is most appropriate for a certain measurement. Ideally, all measurements for any one experiment should have about the same percentage accuracy.

Significant Figures

A significant figure is a digit which is reasonably trustworthy. One and only one estimated or doubtful figure can be retained and regarded as significant in any measurement, or in any calculation involving physical measurements. In the examples given under Accuracy above, 4.6 mm has two significant figures and 27.42 cm has four significant figures.

The location of the decimal point has no relation to the number of significant figures. The reading 6.54 cm could be written as 65.4 mm or as 0.0654 m without changing the number of significant figures - three in each case.

The presence of a zero is sometimes troublesome. If it is used merely to indicate the location of the decimal point, it is not called a significant figure, as in 0.0654 m; if it is between two significant digits, as in a temperature reading of 20.5°, it is always significant. A zero digit at the end of a number tends to be ambiguous. In the absence of specific information we cannot tell whether it is there because it is the best estimate or merely to locate the decimal point. In such cases the true situation should be expressed by writing the correct number of significant figures multiplied by the appropriate power of 10.

Thus a student measurement of the speed of light, 186,000 mi/s, is best written as 1.86 × 10⁵ mi/s to indicate that there are only three significant figures. The latter form is called standard notation, and involves a number between 1 and 10 multiplied by the appropriate power of 10. It is equally important to include the zero at the end of a number if it is significant. If, in reading a meter stick, one estimates to a fraction of a millimeter, then a reading of 20.00 cm is written quite correctly. In such a case, valuable information would be thrown away if the reading were recorded as 20 cm. The recorded number should always express the degree of accuracy of the reading.
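When results are recorded by computer, the number of significant figures has to be requested explicitly. The sketch below uses Python's built-in format specifiers; `standard_notation` is a hypothetical helper name, and Python's `e` notation (`1.86e+05`) is its way of writing a number between 1 and 10 multiplied by a power of 10.

```python
def standard_notation(value, sig_figs):
    """Format value in standard notation with sig_figs significant figures."""
    # One digit precedes the decimal point, so sig_figs - 1 digits follow it.
    return f"{value:.{sig_figs - 1}e}"

# The speed-of-light measurement, known to three significant figures.
print(standard_notation(186000, 3))   # 1.86e+05

# A meter-stick reading estimated to a fraction of a millimeter:
# the trailing zeros are significant and must be kept.
print(f"{20.0:.2f} cm")               # 20.00 cm
```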

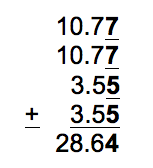

In computations involving measured quantities, carry only those digits which are significant. Consider a rectangle whose sides are measured as 10.77 cm and 3.55 cm (the last digit of each reading is the doubtful one). When these lengths are added to find the perimeter, the last digit in the answer will also be doubtful.

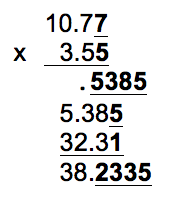

When the lengths are multiplied to obtain the area, any operation by a doubtful digit results in a doubtful digit.
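The rectangle example can be worked through numerically. This is a sketch only: it assumes the common rule of thumb that a product keeps as many significant figures as its least precise factor, which is a shorthand for the digit-by-digit argument above, and `round_sig` is a hypothetical helper.

```python
from math import floor, log10

def round_sig(value, sig_figs):
    """Round a nonzero value to the given number of significant figures."""
    exponent = floor(log10(abs(value)))
    return round(value, sig_figs - 1 - exponent)

length_cm = 10.77   # four significant figures, last digit doubtful
width_cm = 3.55     # three significant figures, last digit doubtful

# Addition: both readings are doubtful in the second decimal place,
# so the sum is kept to two decimal places.
sum_cm = round(length_cm + width_cm, 2)

# Multiplication: the raw product is 38.2335, but only three figures
# can be trusted because the width has only three.
area_cm2 = round_sig(length_cm * width_cm, 3)

print(sum_cm, area_cm2)
```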

ERRORS

A quantity measured or calculated by a scientist is only of value if he/she can attach to it quantitative limits within which he/she expects that it is accurate - that is, its uncertainty. An uncertainty of 50% or even 100% is a vast improvement over no knowledge at all; an accuracy of ±10% is a great improvement over ±50%, and so on. In fact, much of science is directed toward reducing the uncertainties in specific quantities of scientific interest.

The uncertainty in a reading or calculated value is technically called an error. The word has this precise meaning in science and carries no implication of mistake or sin.

Systematic Errors

A systematic error is one which always produces an error of the same sign. Systematic errors may be sub-divided into three groups: instrumental, personal and external. Corrections should be made for systematic errors when they are known to be present.

- Instrumental Errors are caused by faulty or inaccurate apparatus; for example, an undetected zero error in a scale or an incorrectly adjusted watch. If 0.2 mm has been worn off the end of a ruler, all readings will be 0.02 cm too high.

- Personal Errors are due to some peculiarity or bias of the observer. Probably the most common source of personal error is the tendency to assume that the first reading taken is correct. A scientist must constantly be on guard against any bias of this nature and make each measurement as if it were completely isolated from all previous experience. Other personal errors may be due to fatigue, the position of the eye relative to a scale, etc.

- External Errors are caused by external conditions (wind, temperature, humidity, vibration); examples are the expansion of a scale as the temperature rises or the swelling of a meter stick as humidity increases.

Random Errors

Random errors occur as variations which are due to a large number of factors. Each factor adds its own contribution to the total error. The resulting error is a matter of chance and, therefore, positive and negative errors are equally probable. Because random errors are subject to the laws of chance, their effect in the experiment may be lessened by taking a large number of observations. A simplified statistical treatment of random errors is described in Appendix A of this manual.
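The benefit of taking many observations can be illustrated with a small simulation. This is a sketch under stated assumptions, not the treatment of Appendix A: the random errors are assumed to follow a Gaussian distribution, and the true value and error size are hypothetical numbers chosen for illustration.

```python
import random
from statistics import mean, pstdev

random.seed(0)  # fixed seed so the sketch is repeatable

TRUE_VALUE_CM = 6.540     # hypothetical "true" length
RANDOM_ERROR_CM = 0.002   # hypothetical spread of a single reading

def mean_of_readings(n):
    """Average n simulated readings scattered symmetrically about the true value."""
    return mean(random.gauss(TRUE_VALUE_CM, RANDOM_ERROR_CM) for _ in range(n))

def spread_of_means(n, trials=200):
    """Standard deviation of the mean over many repeated sets of n readings."""
    return pstdev(mean_of_readings(n) for _ in range(trials))

spread_few = spread_of_means(4)      # averages of only 4 readings
spread_many = spread_of_means(100)   # averages of 100 readings

print(f"spread with 4 readings:   {spread_few:.5f} cm")
print(f"spread with 100 readings: {spread_many:.5f} cm")
```

Averages built from more readings scatter less about the true value, because positive and negative random errors tend to cancel.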

The Error Interval

If it is not practical or possible to repeat a measurement many times, the errors in measurement must be estimated differently.

Since the last digit recorded for a reading is only an estimation, there is some possibility of error in this digit due to the instrument itself and the judgement of the observer. Hence, the best that can be done is to assign some limits within which the observer believes the reading to be accurate.

A reading of 6.540 cm might imply that it lay between 6.538 and 6.542 cm. The reading would then be recorded as (6.540 ± 0.002) cm. The scales on most instruments are as finely divided by the manufacturer as it is practical to read. Hence, the error interval will probably be some fraction of the smallest readable division on the instrument; it might be 0.5 of a division, or perhaps 0.2 of a division. The error interval is a property of the instrument and the user, and will remain the same for all readings taken provided the scale is linear.

Remember that measurement of a quantity (such as length) also involves a zero reading, so the error in the quantity will be twice the reading error. Note that it is essential to quote an error with every set of measurements.
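The doubling of the reading error can be sketched as follows. The helper `length_with_error` is a hypothetical name, and the readings reuse the 6.540 cm example with a reading error of 0.002 cm and an assumed zero reading of 0.000 cm.

```python
def length_with_error(end_reading, zero_reading, reading_error):
    """Return (value, error) for a length obtained from two scale readings."""
    value = end_reading - zero_reading
    # Both the end reading and the zero reading carry the same reading error,
    # so the error in the difference is twice the reading error.
    error = 2 * reading_error
    return value, error

value_cm, error_cm = length_with_error(6.540, 0.000, 0.002)
print(f"({value_cm:.3f} ± {error_cm:.3f}) cm")
```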

Absolute Errors

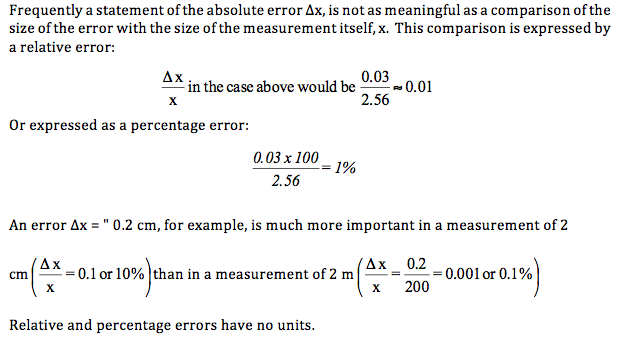

The estimation of an error interval gives what is called an "absolute" error. It has the same units as the measurement itself; e.g. (2.56 ± 0.03) cm.